Photographed on the coast in Santa Cruz, CA

I am a research scientist at NVIDIA Research, where I lead the training of intelligent and efficient foundation models. I earned my Ph.D. in Computer Science from Harvard University in 2023, where I was advised by H.T. Kung and was a recipient of the Harvard James Mills Peirce Fellowship. I have also gained research experience at universities including Nanyang Technological University and UC San Diego, and at companies including Meta/Facebook and Tencent. Email: xindong [at] alumni.harvard.edu

News

- Aug 2025 » We released a new series of NVIDIA Nemotron models built on an efficient architecture, which we hope will contribute to building the next generation of model architectures.

- Aug 2025 » We further scaled up the compute (3k steps) and data (5 distinct domains) of RL, setting a new scaling record for open-source RL training. Check out ProRL-V2.

- Apr 2025 » We released CLIMB, a robust method for building LLM pre- and post-training datasets. With it, we have now completed the puzzle of data preparation, model architecture, training recipes, and alignment needed to achieve state-of-the-art small language models (SLMs).

- Nov 2024 » We released the first intra-layer (head-wise fusion) hybrid model, Hymba-1.5B (accepted to ICLR 2025 as a spotlight), which outperforms LLaMA 3.2-3B while being trained on 7× fewer tokens and achieving a 12× cache reduction.

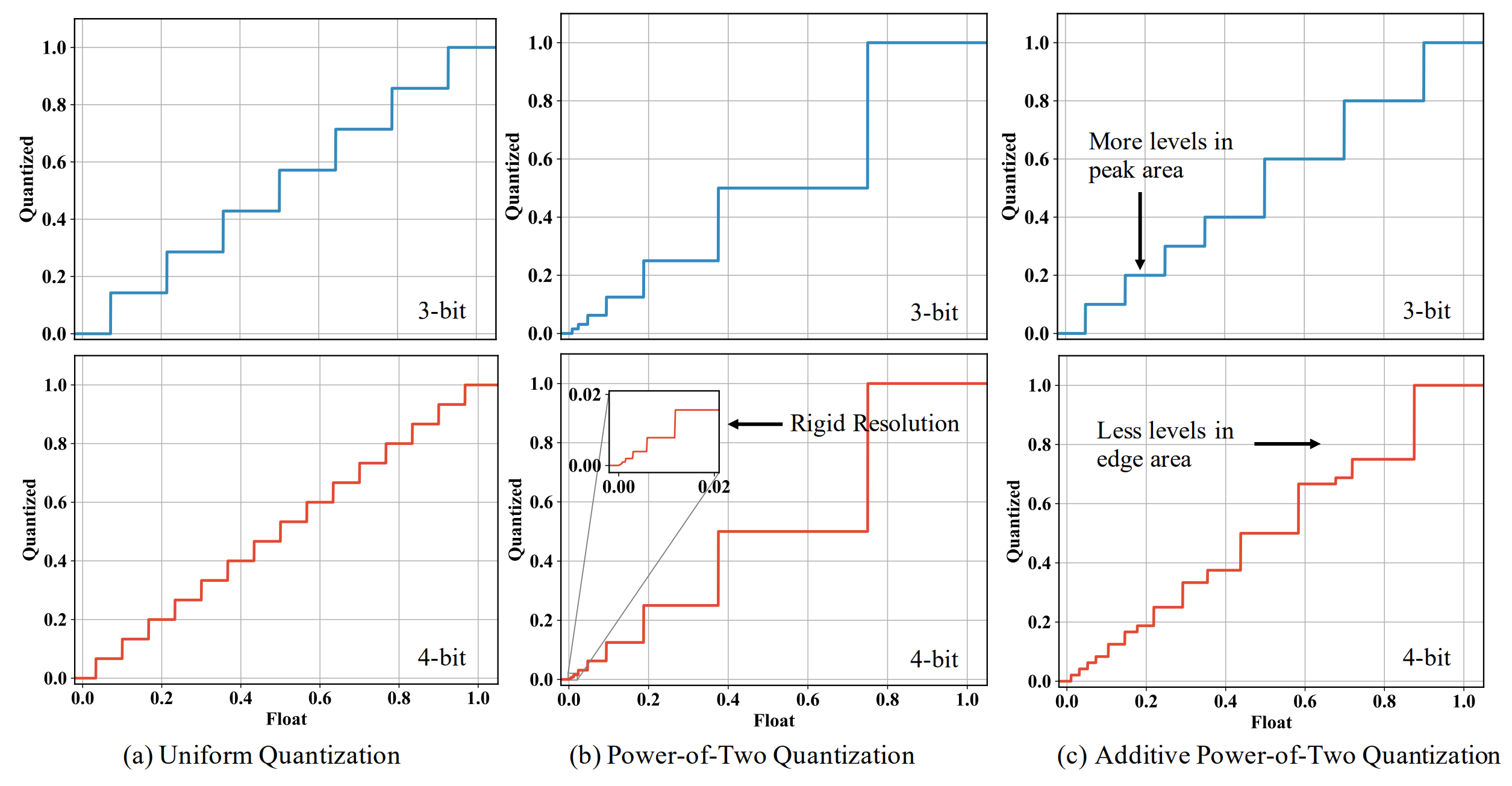

- Oct 2022 » Our Additive Power-of-Two Quantization (ICLR'20) is now supported in official PyTorch.

Experiences

NVIDIA

Sony

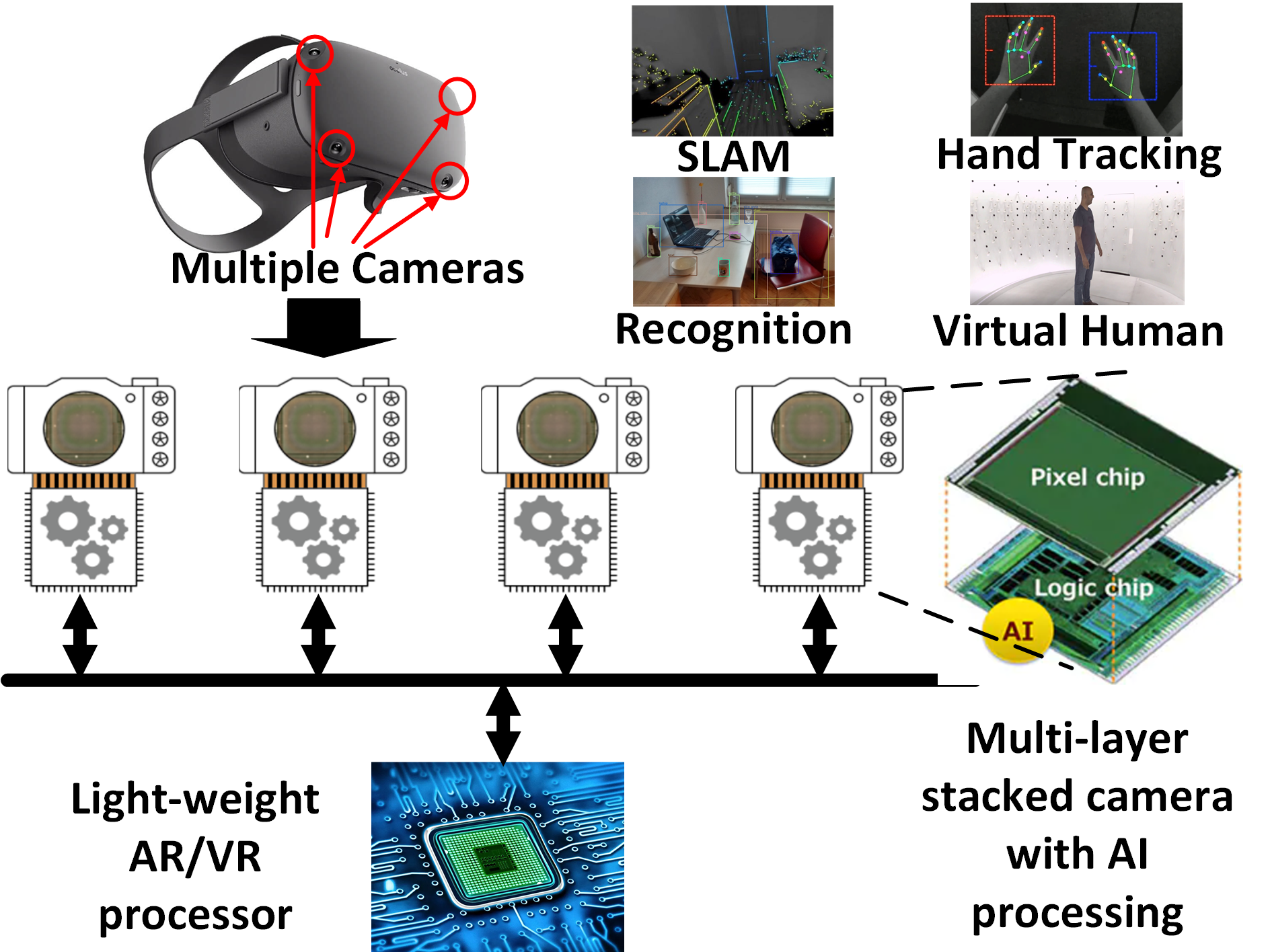

Meta Reality Lab

NVIDIA

Tencent America

Projects

Social Media Highlights


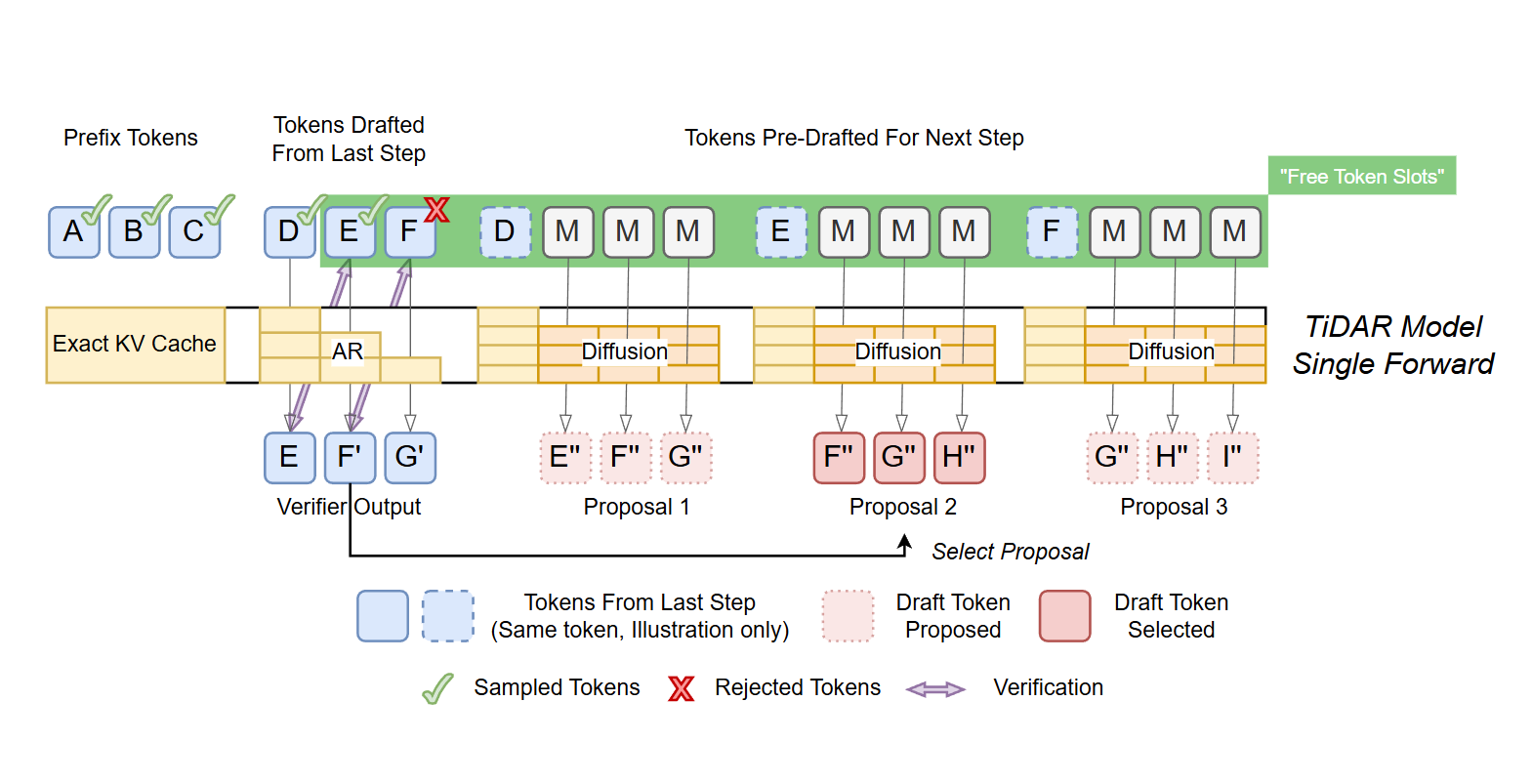

- Autoregressive or diffusion language models: why choose? TiDAR gives you the best of both worlds in one model and a single forward pass, with zero overhead, by leveraging the unused compute-to-memory density of GPUs.

- TiDAR is fundamentally superior to both Multi-Token Prediction (DeepSeek-V3-style) and Speculative Decoding.

LLM

Architecture

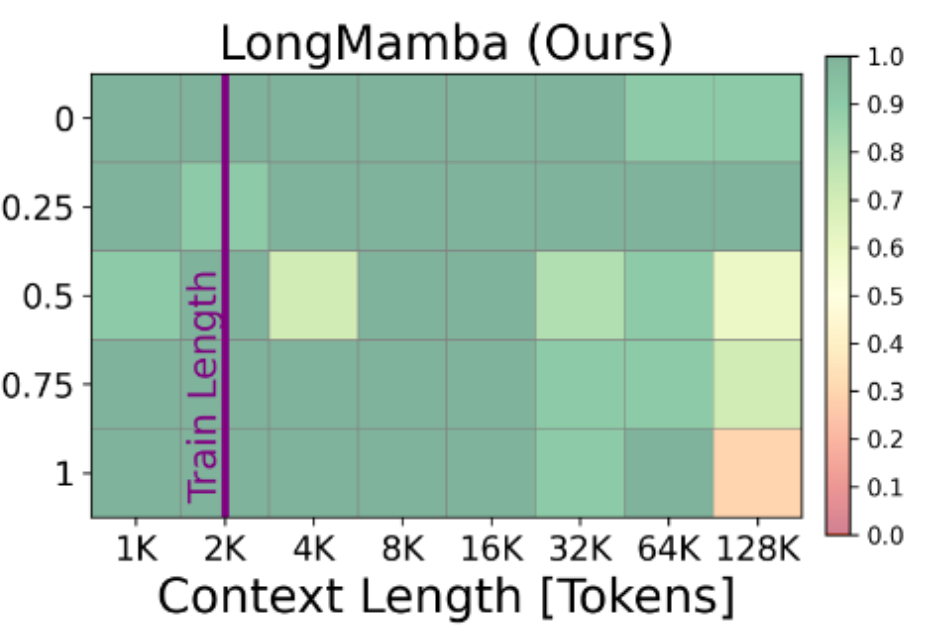

Hymba: A Hybrid-head Architecture for Small Language Models

International Conference on Learning Representations (ICLR 2025 Spotlight)

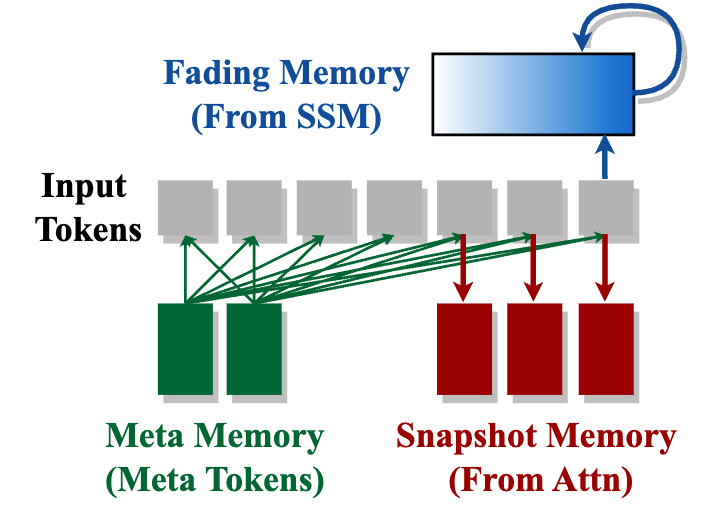

- Hymba is the first head-wise parallel hybrid architecture for LLMs.

- A study from FAIR at Meta confirms that intra-layer (head-wise parallel) fusion achieves a better throughput/accuracy Pareto frontier than inter-layer (layer-wise parallel) fusion.

- Falcon-H1 (up to 34B parameters, trained on 18T tokens) from the UAE adopted the same parallel architecture as Hymba and shows impressive performance.

- Dragon (3B parameters trained on 3.5T tokens, with bigger models coming) from DragonLLM and EuroHPC validates the architecture's superior performance and long-context potential (long documents, files, code, and contracts).

- NaPE, a head-wise hybridization of positional embeddings (PE) from NVIDIA, demonstrates the best long-range state tracking and length generalization among ten common PE variants.

LLM

Agents

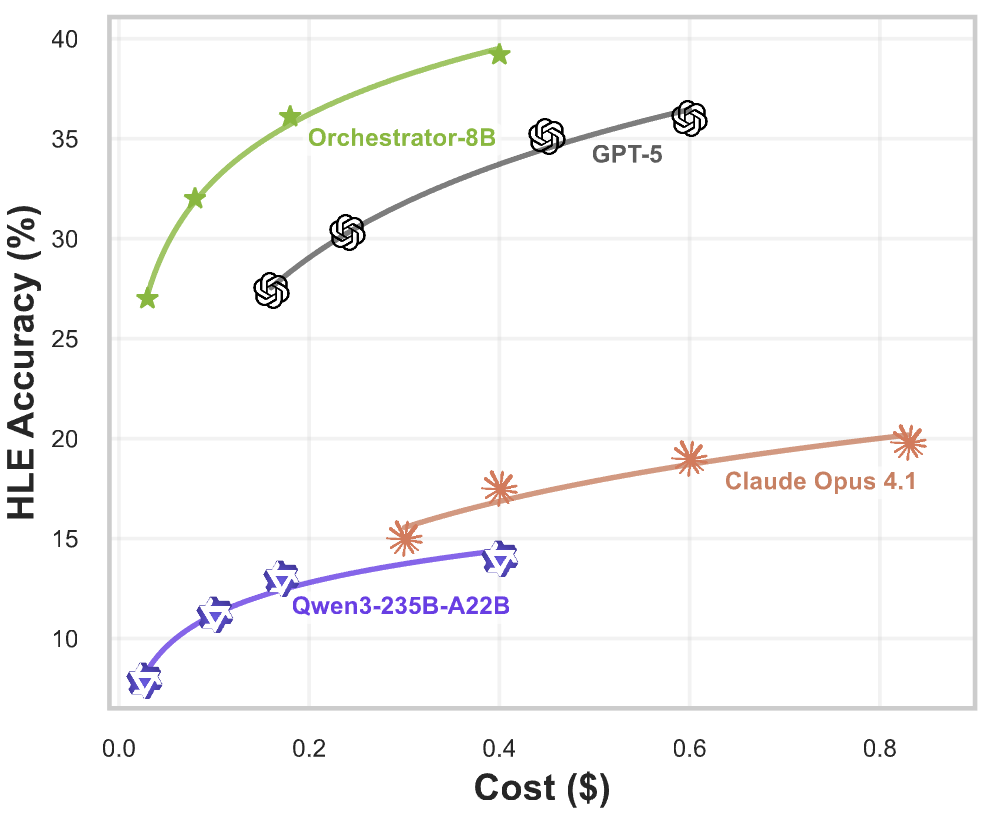

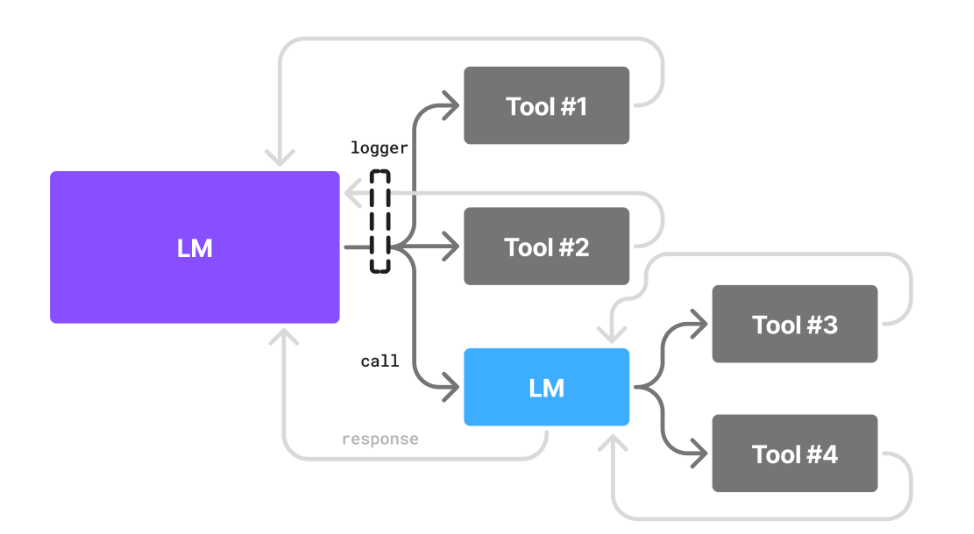

ToolOrchestra: Elevating Intelligence via Efficient Model and Tool Orchestration

- On Humanity's Last Exam, one of the hardest benchmarks, ToolOrchestra beats GPT-5 while being 2.5x more efficient.

- ToolOrchestra demonstrates that a small model can manage context and decide which stronger models or tools to call.

- Nemotron-ToolOrchestra (an agent built on top of ToolOrchestra) reached #1 on the GAIA benchmark.

LLM

Dataset

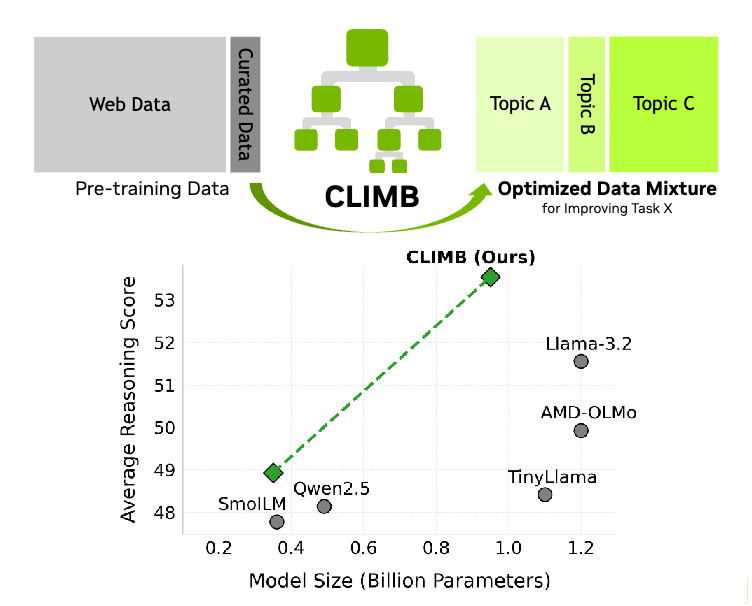

CLIMB: CLustering-based Iterative Data Mixture Bootstrapping for Language Model Pre-training

Neural Information Processing Systems (NeurIPS 2025 Spotlight) (2.8%, dataset track)

- Industry-grade LLM pre-training dataset curation pipeline.

- Highly customizable and flexible for different optimization objectives and human effort requirements.

LLM

Reasoning

ProRL: Prolonged Reinforcement Learning Expands Reasoning Boundaries in Large Language Models

Neural Information Processing Systems (NeurIPS 2025)

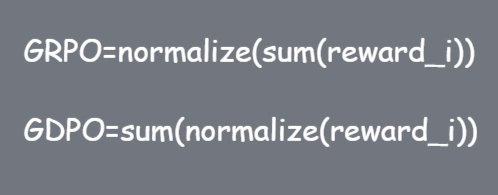

- To the best of our knowledge, this is the first open-source model to scale RL training to 2,000 steps.

- We further scaled up the compute (3k steps) and data (5 distinct domains) of RL, setting a new record for open-source RL training. Check out ProRL-V2.

LLM

Release

Architecture

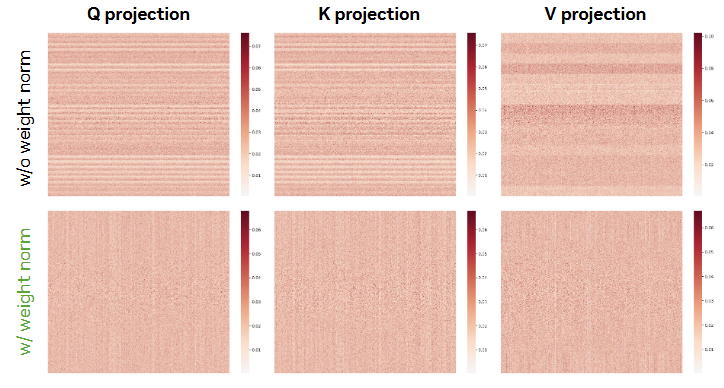

Nemotron-Flash: Towards Latency-Optimal Hybrid Small Language Models

Neural Information Processing Systems (NeurIPS 2025)

- We study how to choose layer types and the width-depth configuration when both accuracy and latency matter.

- Classic weight normalization turns out to be surprisingly effective for LLM optimization.

LLM

Release

Architecture

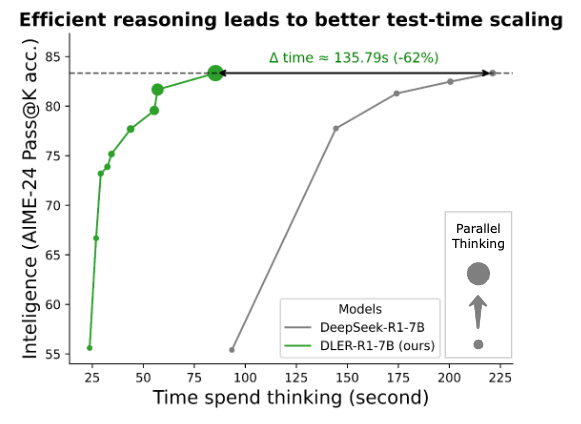

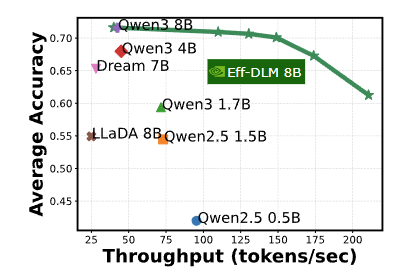

Efficient-DLM: From Autoregressive to Diffusion Language Models, and Beyond in Speed

- Attracted considerable online attention, with extensive engagement from academia and industry, and received coverage from major media outlets such as The Wall Street Journal.

LLM

Context

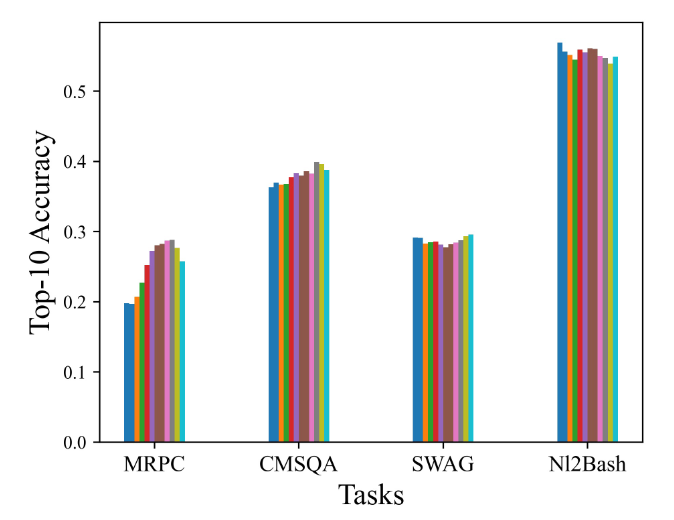

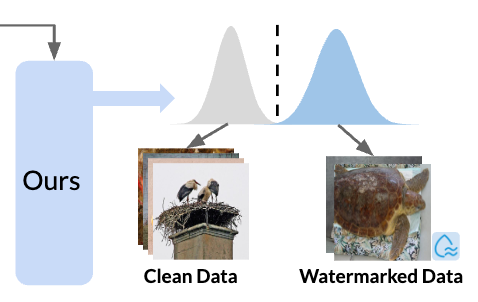

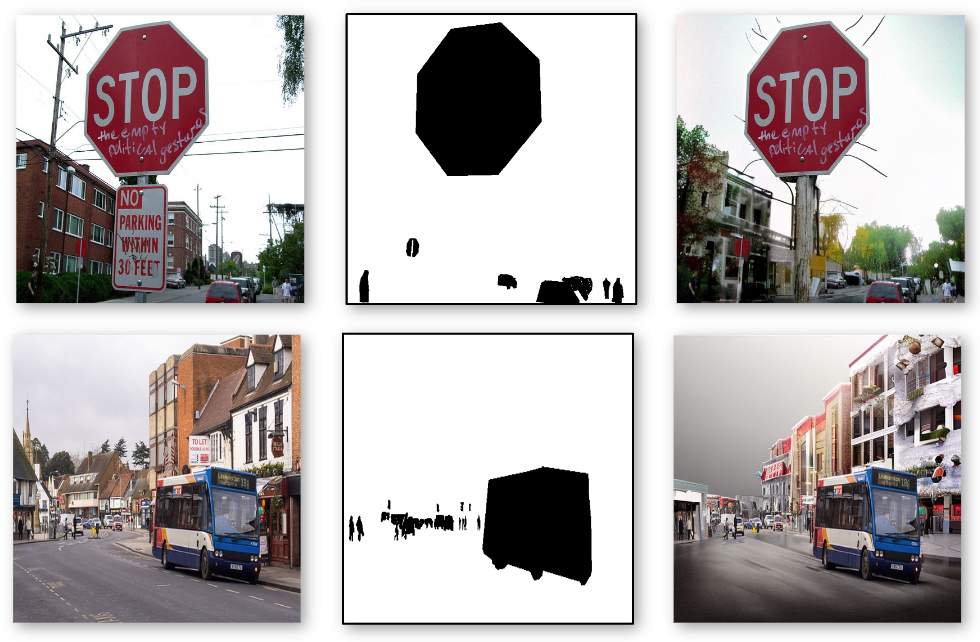

Unraveling the Mechanics of Learning-Based Demonstration Selection for In-Context Learning

Annual Meeting of the Association for Computational Linguistics (ACL 2025 Oral)

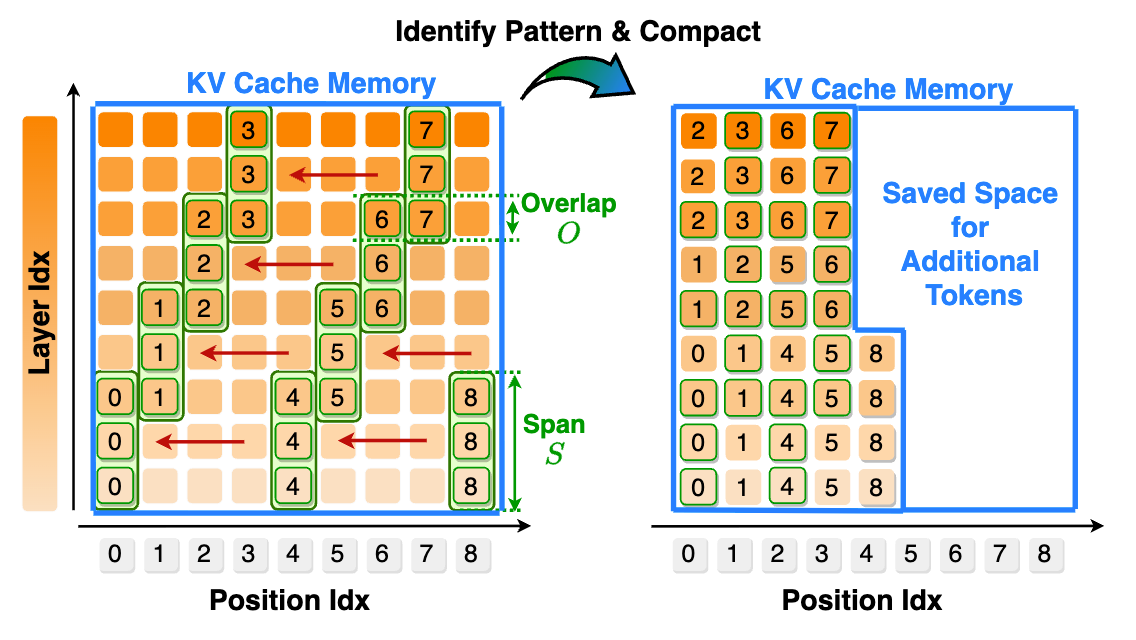

LLM

Efficiency

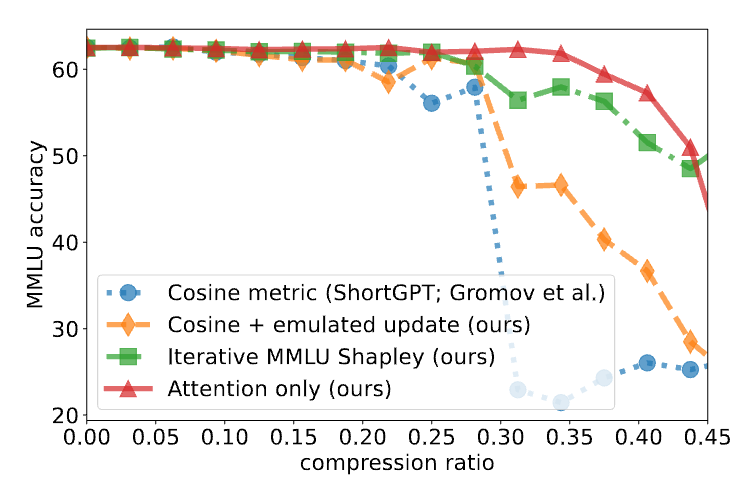

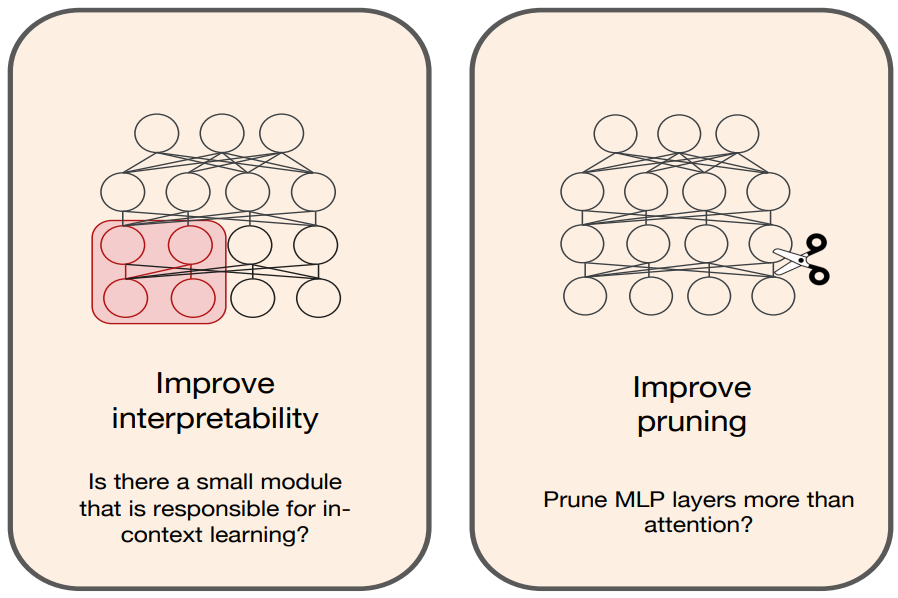

A Deeper Look at Depth Pruning of LLMs

ICML 2024 Workshop on Theoretical Foundations of Foundation Models (ICML Workshop 2024)

Training

Is Heterogeneity Notorious? Taming Heterogeneity to Handle Test-Time Shift in Federated Learning

Conference on Neural Information Processing Systems (NeurIPS 2023)

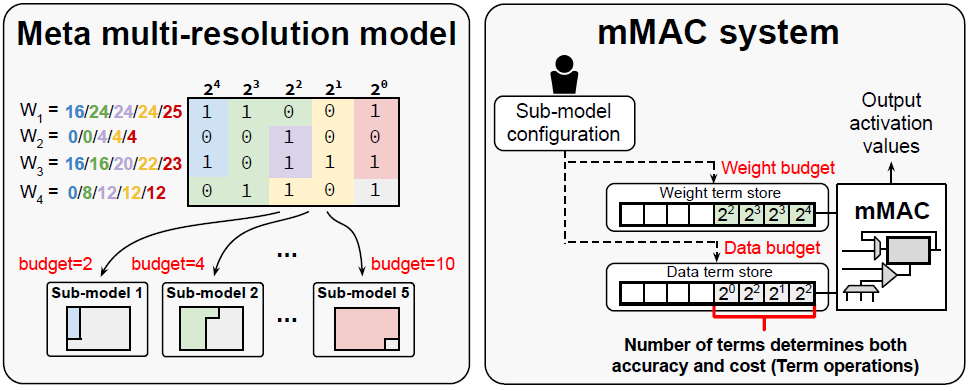

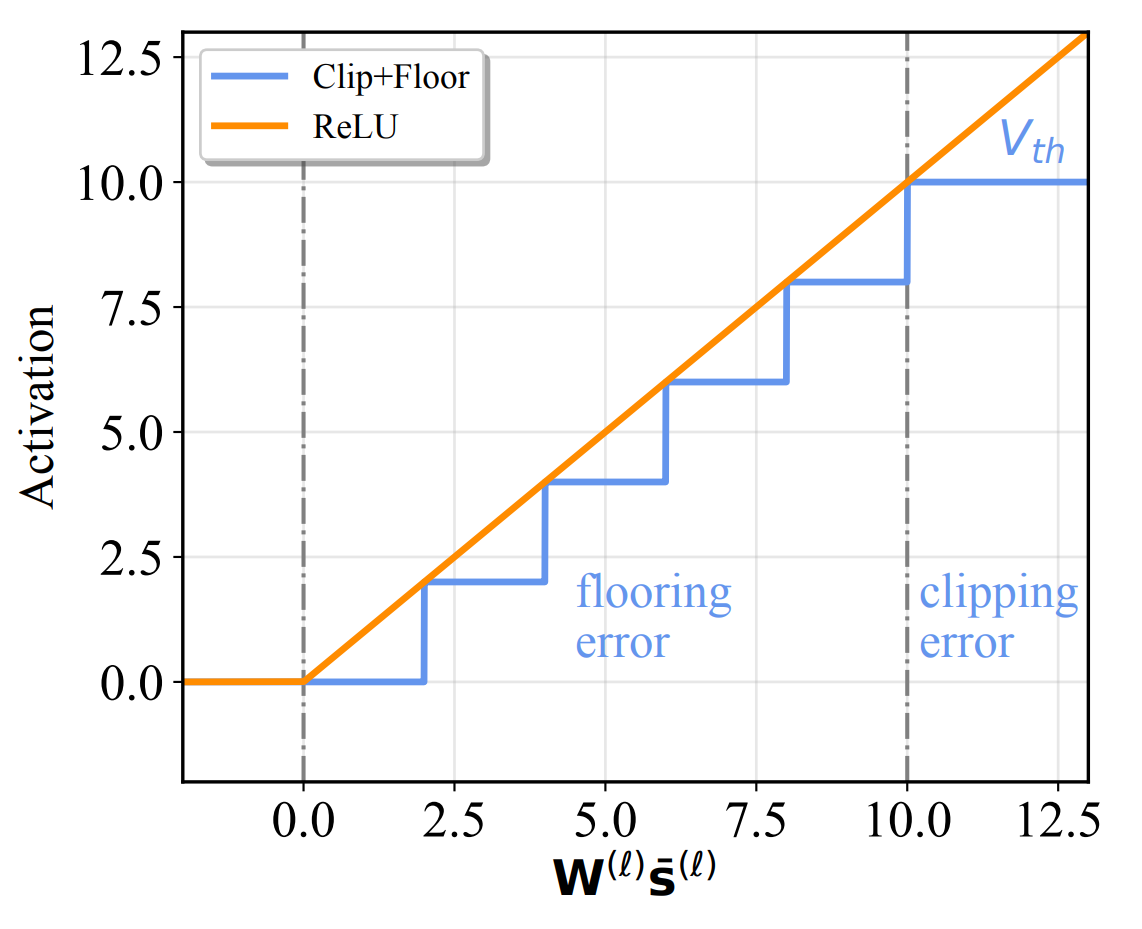

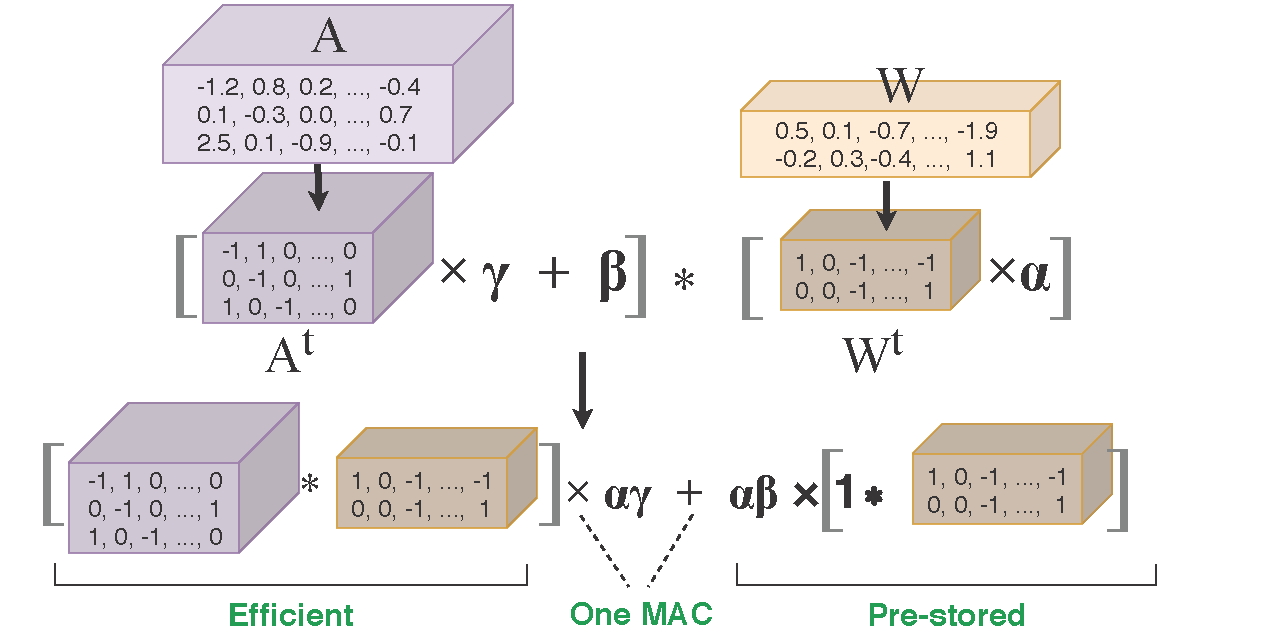

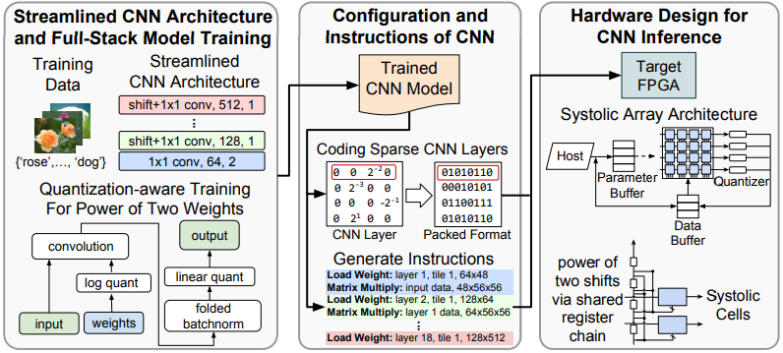

Training for Multi-resolution Inference Using Reusable Quantization Terms

The 26th ACM International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS 2021)

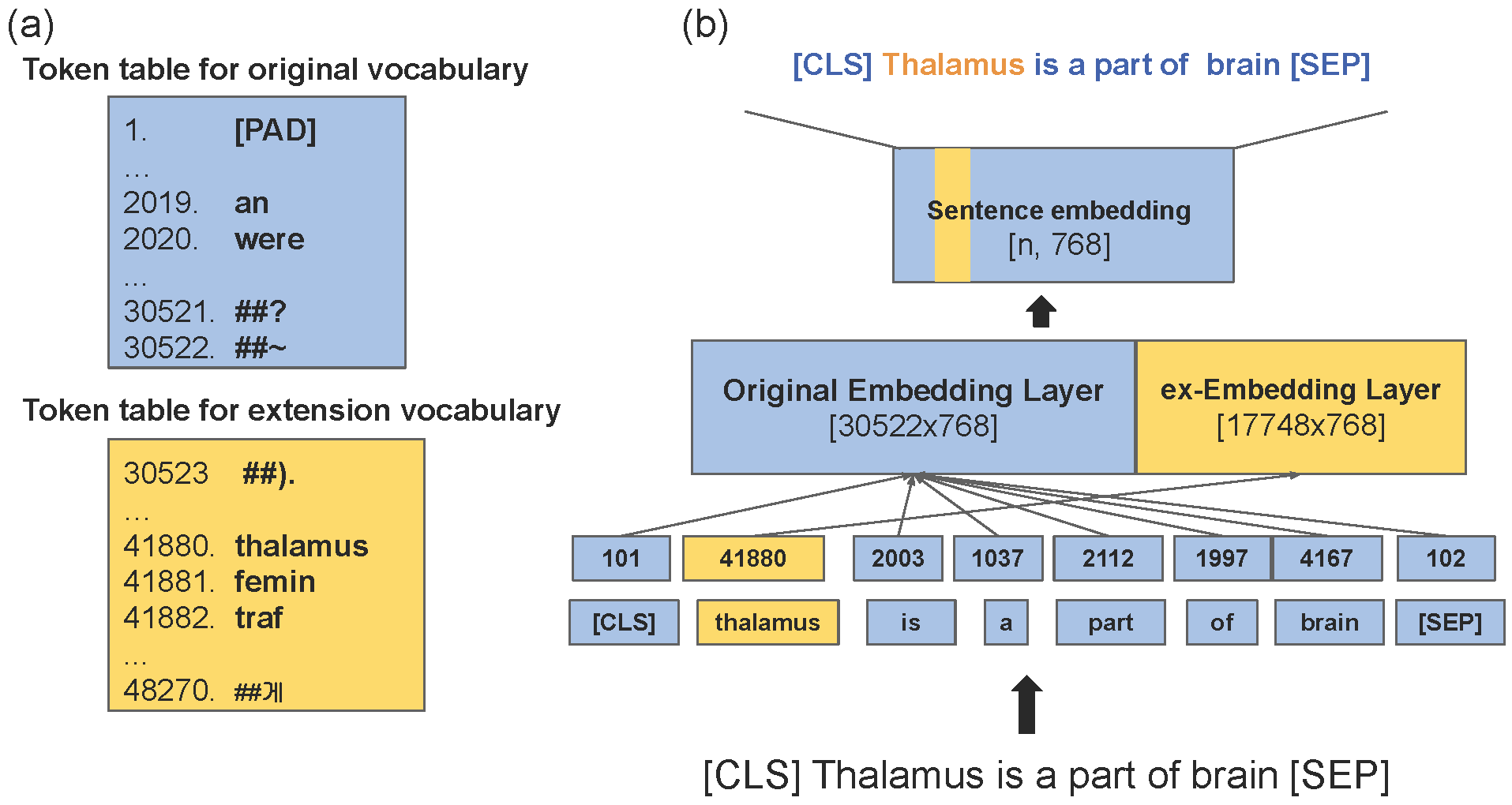

exBERT: Extending Pre-trained Models with Domain-specific Vocabulary Under Constrained Training Resources

The 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP 2020)

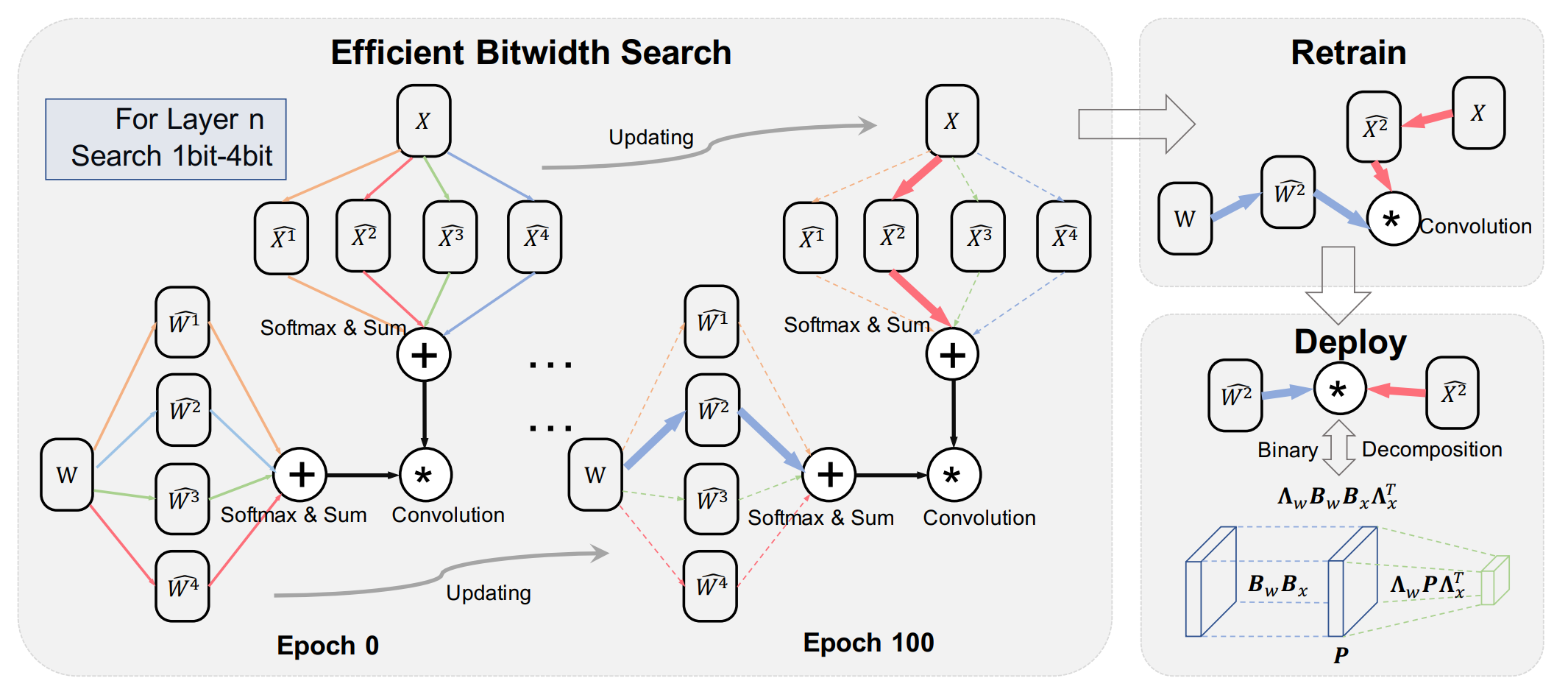

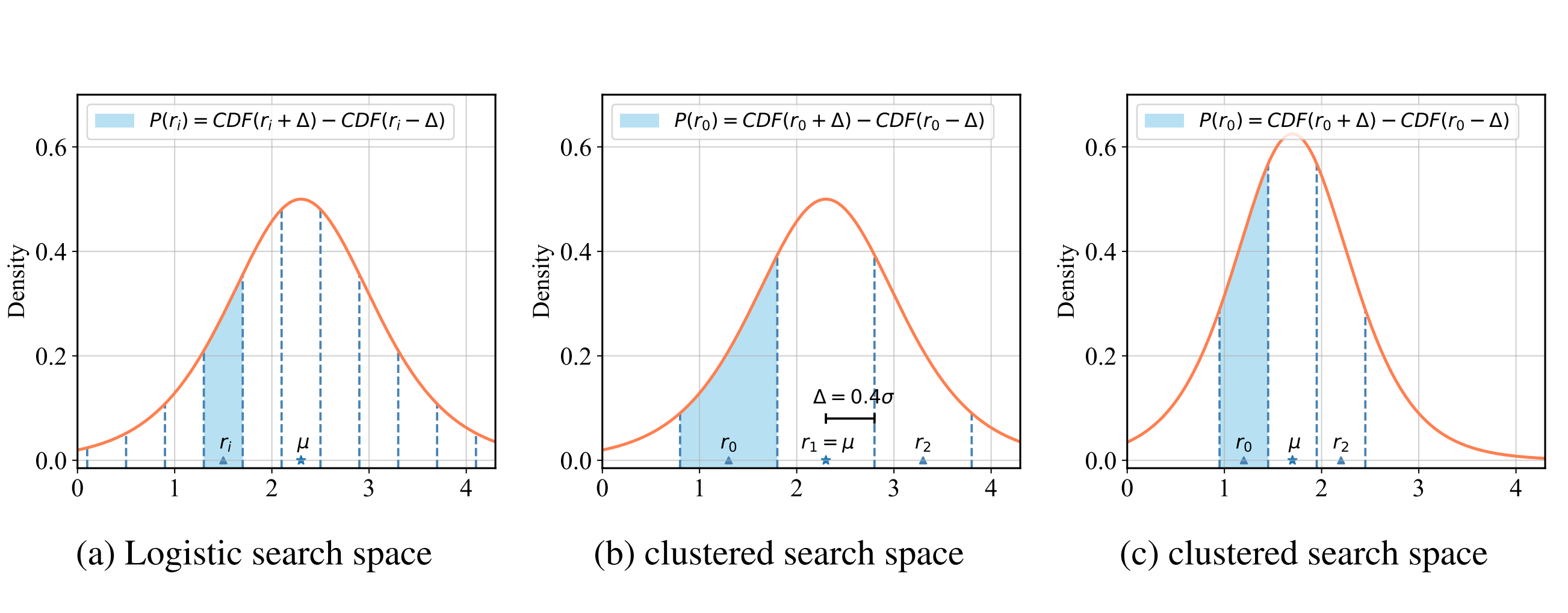

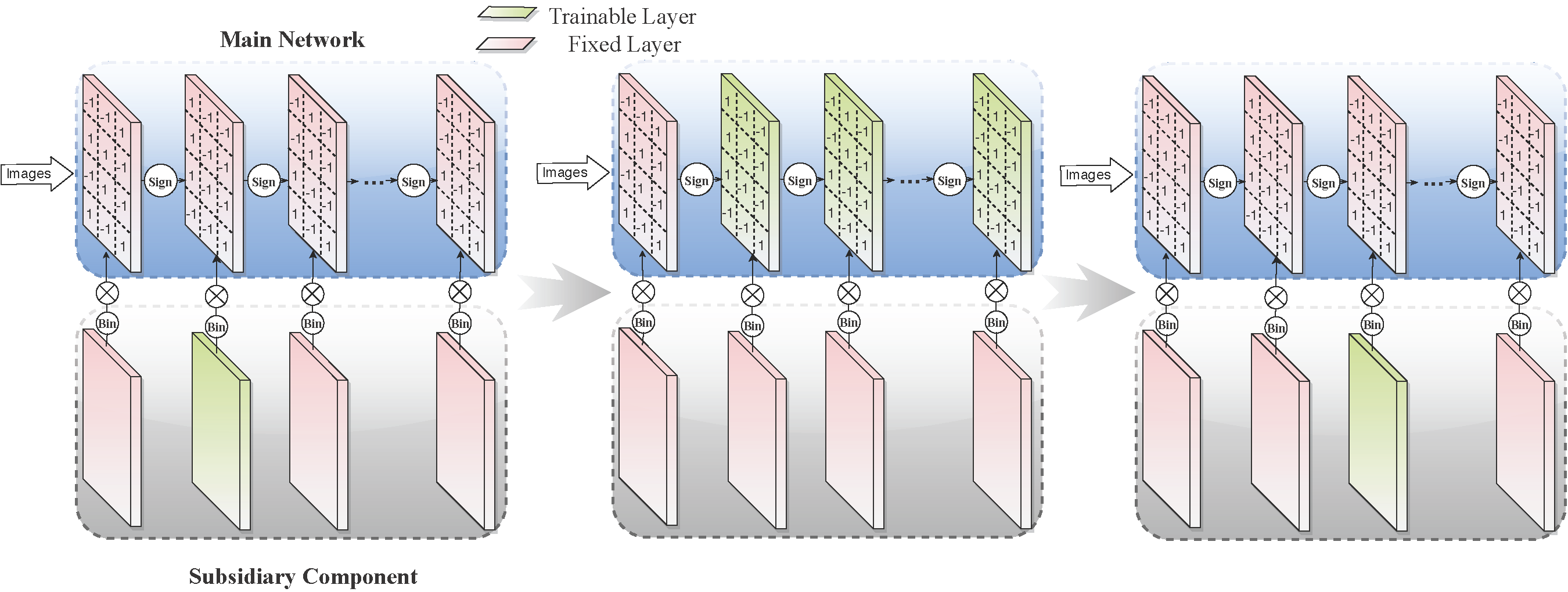

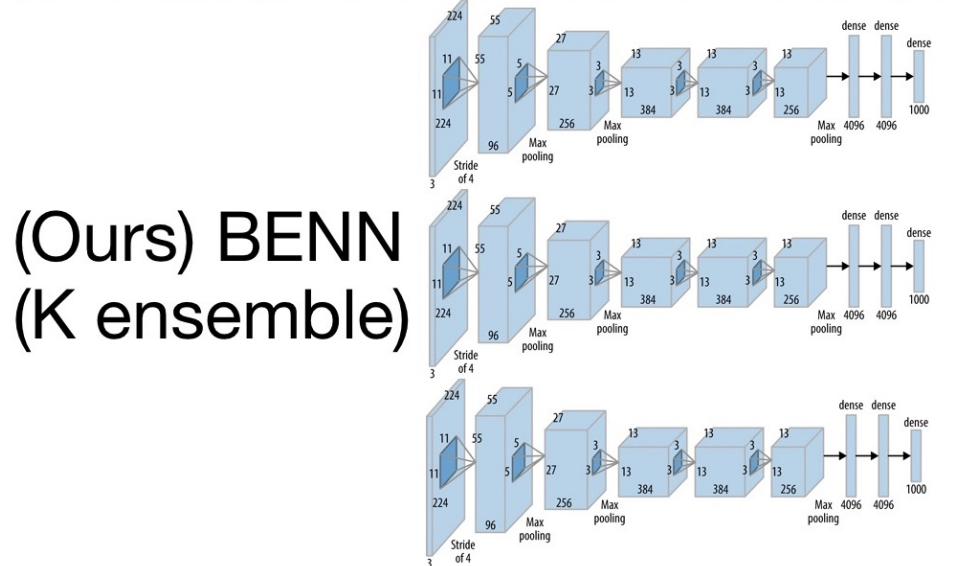

Differentiable Dimension Search for Binary Neural Networks

1st Workshop on Neural Architecture Search at ICLR 2020 (ICLR 2020 Workshop)

Learning to Prune Deep Neural Networks via Layer-wise Optimal Brain Surgeon

Thirty-first Conference on Neural Information Processing Systems (NeurIPS 2017)

Academic Services

- Reviewer/Area Chair for ICML, NeurIPS, AAAI, IJCAI, CVPR, ICCV, ECCV, EMNLP, ACL

- Co-organizer of the 1st international workshop on The Practical Deep Learning in the Wild (PracticalDL-22) at AAAI 2022

- Teaching Fellow for Harvard CS242: Computing at Scale